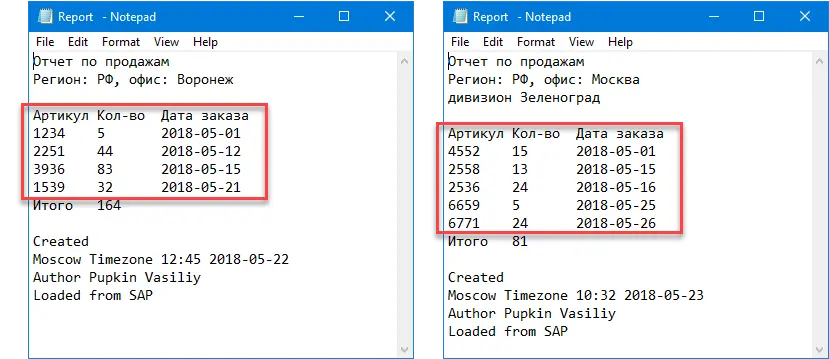

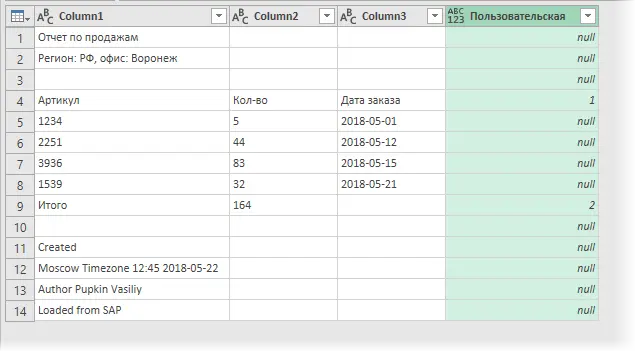

Sometimes there are situations when it is not known in advance exactly how many and which rows need to be imported from the source data. Suppose we have to load data from a text file into Power Query, which, at first glance, does not present a big problem. The difficulty is that the file is regularly updated, and tomorrow it may have a different number of lines with data, a header of three, not two lines, etc.:

That is, we cannot say in advance with certainty, starting from which line and exactly how many lines need to be imported. And this is a problem, because these parameters are hard-coded in the M-code of the request. And if you make a request for the first file (importing 5 lines starting from the 4th), then it will no longer work correctly with the second one.

It would be great if our query could itself determine the beginning and end of the “floating” text block for import.

The solution I want to propose is based on the idea that our data contains some keywords or values that can be used as markers (features) of the beginning and end of the data block we need. In our example, the beginning will be a line starting with the word SKU, and the end is a line with the word Total. This row validation is easy to implement in Power Query using a conditional column – an analogue of the function IF (IF) в Microsoft Excel.

Let’s see how to do it.

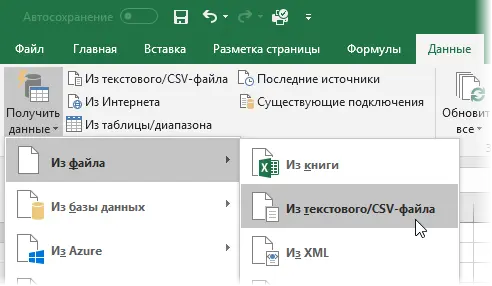

First, let’s load the contents of our text file into Power Query in the standard way – through the command Data – Get data – From file – From text/CSV file (Data – Get Data – From file – From text/CSV file). If you have Power Query installed as a separate add-in, then the corresponding commands will be on the tab Power Query:

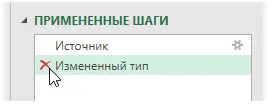

As always, when importing, you can select the column separator character (in our case, this is a tab), and after importing, you can remove the automatically added step modified type (Changed Type), because it’s too early for us to assign data types to columns:

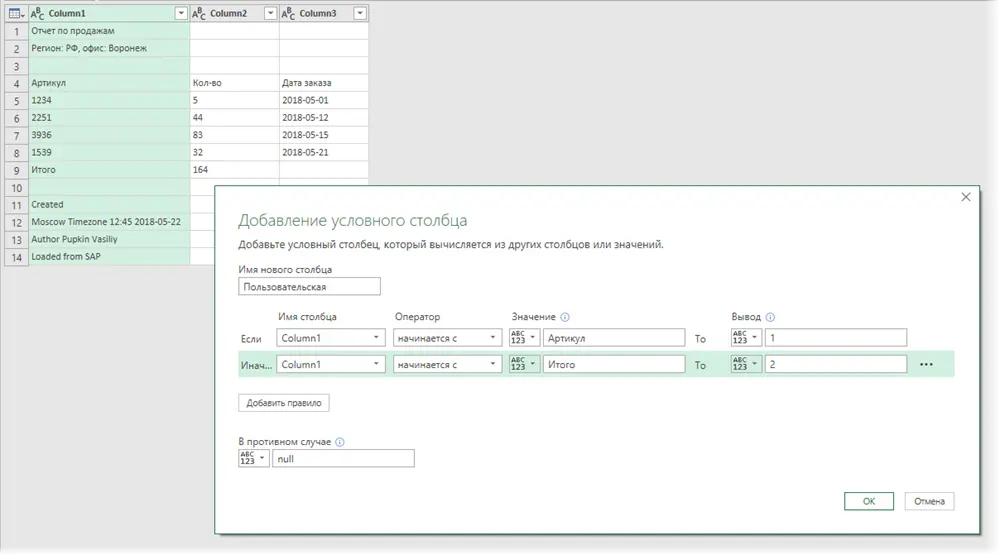

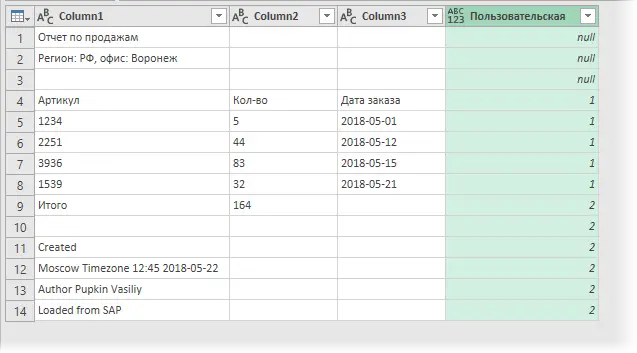

Now with the command Adding a Column – Conditional Column (Add Column — Conditional Column)let’s add a column with checking two conditions – at the beginning and end of the block – and displaying any different values in each case (for example, numbers 1 и 2). If none of the conditions is met, then output null:

After clicking on OK we get the following picture:

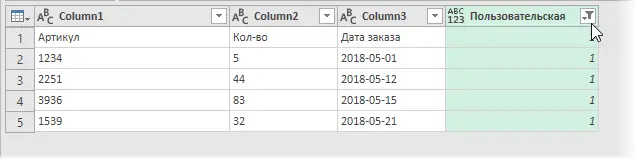

Now let’s go to the tab. Transformation and choose a team Fill – Down (Transform – Fill – Down) – our ones and twos will stretch down the column:

Well, then, as you might guess, you can simply filter the units in the conditional column – and here is our coveted piece of data:

All that remains is to raise the first line to the header with the command Use first line as headers tab Home (Home – Use First Row as Headers) and remove the unnecessary more conditional column by right-clicking on its header and selecting the command Delete column (Delete Column):

Problem solved. Now, when changing the data in the source text file, the query will now independently determine the beginning and end of the “floating” fragment of the data we need and import the correct number of lines each time. Of course, this approach also works in the case of importing XLSX, not TXT files, as well as when importing all files from a folder at once with the command Data – Get data – From file – From folder (Data — Get Data — From file — From folder).

- Assembling tables from different files using Power Query

- Redesigning a crosstab to flat with macros and Power Query

- Building a Project Gantt Chart in Power Query