
Film distribution has been hit hard by the lockdown. Yet thanks to streaming platforms, the number of feature films has not shrunk; if anything, it has grown. Meeting the demand for content would be impossible without modern technologies.

Pandemic and film production

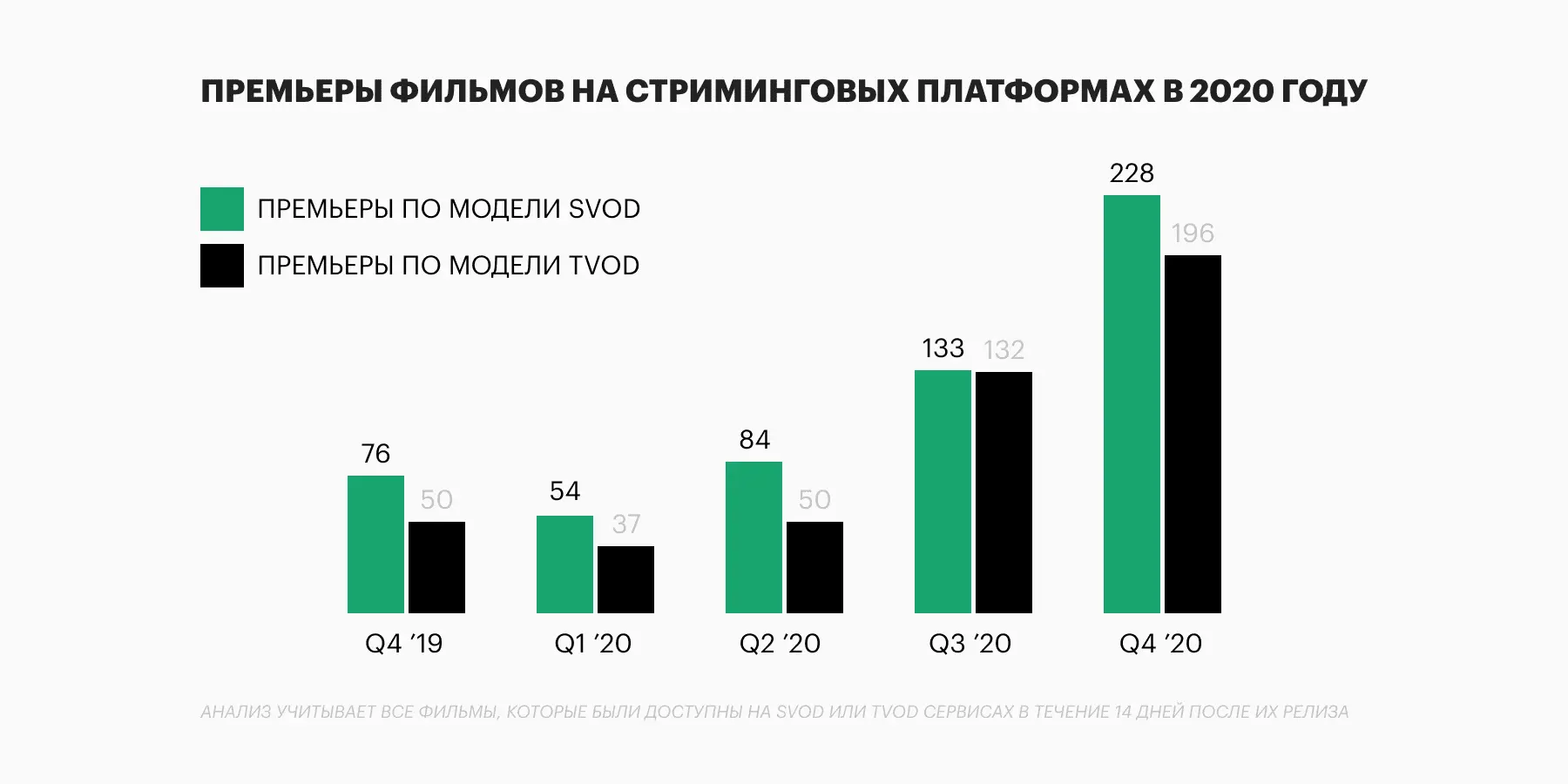

The COVID-19 pandemic has greatly shaken the industry and accelerated its transformation. The number of new films released theatrically in North America dropped sharply in 2020, to 329 from 792 the previous year. Over the same period, the number of releases on SVOD services (subscription video on demand, such as Netflix) tripled, and releases on TVOD platforms (transactional video on demand, a set fee per title, such as iTunes) almost quadrupled.

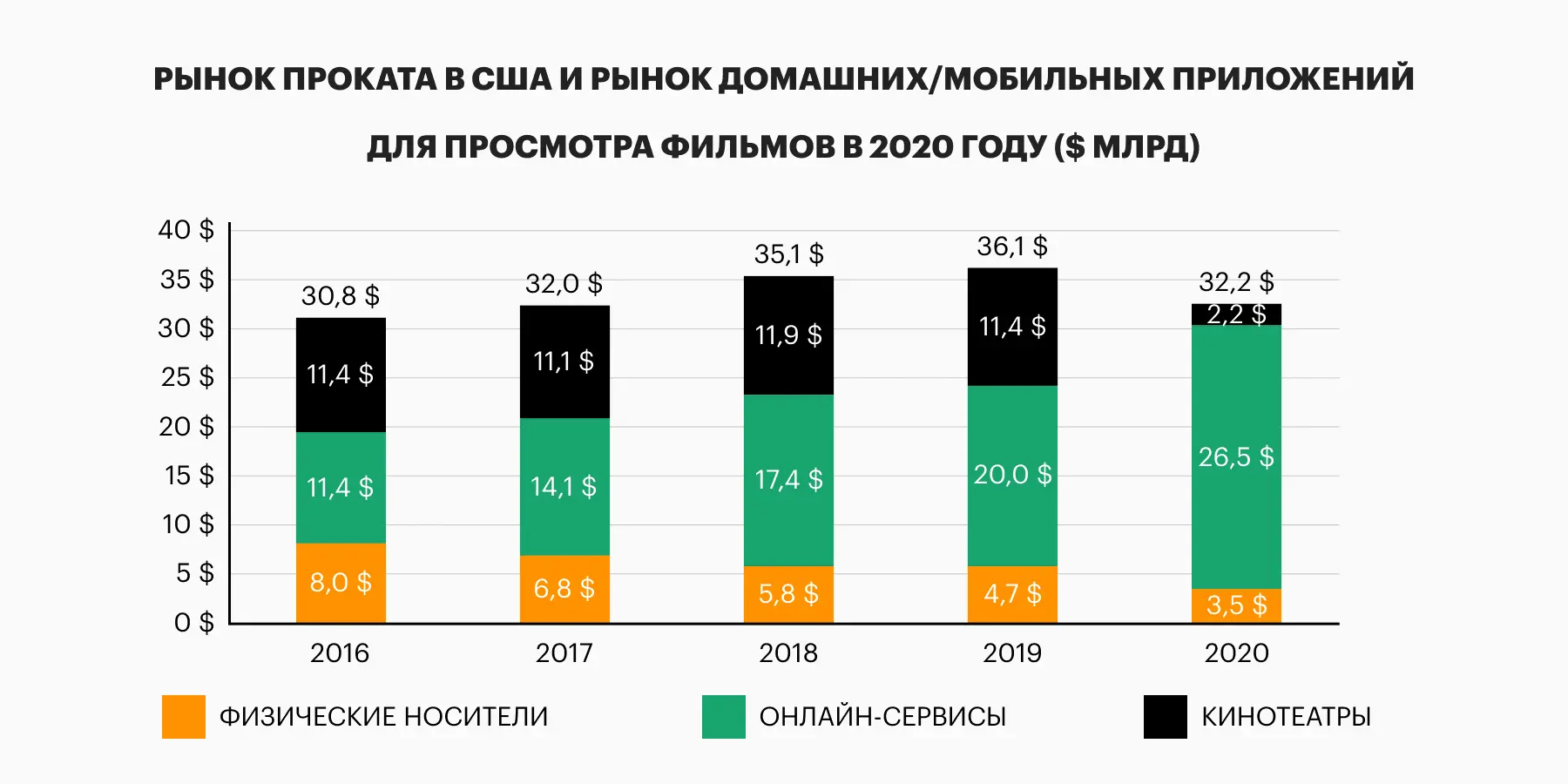

The Hollywood Reporter gives other telling figures. In 2020, the number of streaming subscribers worldwide exceeded 1 billion, up 26% from 2019, bringing the companies $68.8 billion in revenue (up 23%). Meanwhile, box office receipts fell by 80% in the United States and by 72% worldwide.

According to producer Jason Blum (The Purge, Us, Split, Insidious: Chapter 3 and Insidious: The Last Key, Whiplash), the pandemic has pushed film production budgets up by 10% to 20%: insurance costs have risen, and COVID-19 safety protocols have been introduced. For reference, in 2019 the average cost of a film produced by a major studio was $65 million, excluding distribution and marketing.

Budgets for shooting and releasing films and series are no longer what they were, while audience demand for quality content keeps growing. To make more films for less money, the industry has to adopt technologies that solve these problems. Modern technologies help produce a more colorful picture with greater attention to detail, cut the cost of filming, production and post-production, and let studios make and release content faster. For example, the entire post-production of the third season of Star Trek: Discovery was carried out remotely, “from home”.

Film vs digital: opponents of digitalization in cinema

Since 2016, 9 out of 10 feature films have been shot with digital cameras. Digital has opened up vast opportunities for editing, detailing and creating visual effects, which are becoming ever more complex and diverse. At the same time, many eminent directors still shoot on film and have no intention of giving it up. The situation resembles photography: everyone shoots digital because it is cheaper and easier, but “for the soul” and for a special look, creators reach for film.

The most prominent champion of film is Christopher Nolan, who believes that modern cinema should combine digital and film shooting. For the release of “Dunkirk”, Nolan even arranged for 125 theaters across America to show the film “in the original”, on 70 mm film.

Besides Nolan, directors who shoot on film include Quentin Tarantino (“The Hateful Eight”), J.J. Abrams (“Star Wars: The Force Awakens”) and Wes Anderson (“The Grand Budapest Hotel”).

Among the 2020 titles shot on film, including in widescreen and IMAX formats, are A Quiet Place Part II, No Time to Die, Wonder Woman 1984, The King of Staten Island, West Side Story, The Banker and a dozen more notable films. Incidentally, Kodak has renewed its film supply agreements with Disney, NBCUniversal, Paramount, Sony and Warner Bros.

What new technologies make possible in cinema

Technologies that can be called the new industry standard:

- VFX (visual effects): complement or completely change the picture on the screen.

- 4K + 3D: makes the picture smoother; one example is Lucid VR’s LucidCam, billed as “the first and only camera for real-time 4K-3D-VR production.”

- Autonomous filming drones: devices with built-in algorithms that choose the framing and shooting angle on their own.

Commercially successful and festival films can already be shot entirely on a smartphone. Steven Soderbergh filmed his 2019 sports drama High Flying Bird on an iPhone 8 with minor modifications, just as he had shot his previous film, Unsane, on an iPhone. In this case, though, he used an effects plugin to simulate the look of old film stock.

Chroma key is a popular VFX technique: during shooting, the background or an object is covered with a uniform green screen, which is replaced with the required imagery in post-production. Chroma key is used everywhere, from weather forecasts to global blockbusters. That is how dragons appeared in Game of Thrones, Davy Jones in Pirates of the Caribbean grew a tentacle beard, and Harry Potter flew on a broomstick.
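The core keying step can be illustrated with a minimal Python sketch. It assumes frames are plain lists of RGB tuples (0 to 255) and uses a hard threshold, "is this pixel clearly green?". The function names and threshold values are hypothetical. Production keyers compute soft mattes and suppress green spill on edges, and this sketch does neither.

```python
from typing import List, Tuple

# Hypothetical frame representation: a list of rows of (r, g, b) pixels, 0-255.
Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]


def is_key_green(pixel: Pixel, min_green: int = 100, dominance: int = 40) -> bool:
    """True if the pixel looks like green screen: bright green that clearly
    outweighs both red and blue. Thresholds are illustrative assumptions."""
    r, g, b = pixel
    return g >= min_green and g - max(r, b) >= dominance


def chroma_key(foreground: Frame, background: Frame,
               min_green: int = 100, dominance: int = 40) -> Frame:
    """Replace the green-screen pixels of a shot with the matching background pixels."""
    if len(foreground) != len(background) or any(
        len(f) != len(b) for f, b in zip(foreground, background)
    ):
        raise ValueError("foreground and background must have the same size")
    return [
        [bg if is_key_green(fg, min_green, dominance) else fg
         for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# A 2x2 "shot": three green-screen pixels and one skin-toned pixel of the actor.
shot = [[(0, 200, 0), (180, 150, 120)],
        [(10, 220, 30), (0, 255, 0)]]
# A 2x2 "background plate" (e.g. a rendered sky), uniformly dark blue.
plate = [[(20, 20, 80), (20, 20, 80)],
         [(20, 20, 80), (20, 20, 80)]]

composite = chroma_key(shot, plate)
print(composite)  # [[(20, 20, 80), (180, 150, 120)], [(20, 20, 80), (20, 20, 80)]]
```

Only the actor's pixel survives, and every green pixel takes its color from the plate. This per-pixel swap is the basic idea behind the green-screen shots described above.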

Quality VFX is time-consuming and expensive: film companies spend years and millions of dollars on impressive effects. The special effects for Avengers: Infinity War, for example, were created by 15 studios and 2 people. Some of this work can already be taken over by neural networks.

What can a neural network do in movies?

Deepfakes

Deepfakes are fake videos, usually featuring celebrities, created by artificial intelligence. The underlying technology dates back to the 1990s but gained popularity with the development of machine learning. Deepfakes are as entertaining as they are dangerous: in June 2019, for example, a video in which Mark Zuckerberg appears to confess to stealing user data went viral and caused a stir around Facebook.

All of Hollywood is actively experimenting with deepfakes, but Disney has made particularly great strides. In Russia, the technology has already been used to rejuvenate Pavel Maykov for the series “Contact”. With face de-aging technology, the actor was made to look 15 years younger: the neural network smoothed out the forehead wrinkles that betrayed the character’s age. Similar techniques were used in The Irishman and Terminator: Dark Fate.

A second life for actors

Technology makes it possible to copy a person’s speech, appearance and facial expressions. Thanks to neural networks and CGI, this opens the way to digital immortality and the on-screen “cloning” of people who died long ago. Special programs (the simplest and most accessible to the average user are FakeApp and DeepFaceLab) create realistic images of a person from footage, audio recordings and photos, and animators insert them into the film.

Rogue One: A Star Wars Story resurrected Peter Cushing: 22 years after his death, his digital copy appeared on screen as Grand Moff Tarkin. The model was built on the performance of actor Guy Henry, who resembles Cushing.

But another story of a miraculous return from the dead has not gone so smoothly. When the makers of “Finding Jack” announced in 2019 that they would bring back James Dean, an actor who died in 1955, the public was outraged, and angry, frustrated comments rained down on The Hollywood Reporter in response to the news.

A digital copy of James Dean remained part of Finding Jack anyway, and the debate about the ethics of the technology is still open. On the one hand, many actors have their faces scanned so that they can continue to “act” after death, with their relatives receiving a fee.

On the other hand, in the case of Carrie Fisher, the makers of Star Wars did not resort to neural networks: to honor the actress’s memory, they completed Leia Organa’s scenes with manual visual-effects work.

Technologies in post-production

A smartphone can assemble short movies from photos and videos and overlay light music and some text. This is how the simplest neural-network editor works. In theory, it could edit not only amateur videos but also feature films, although the world has yet to see a movie edited entirely by a neural network.

Digital video also surpasses film in its editing possibilities, which makes post-production easier. Adding visual effects used to be a delicate art, because each effect had to blend seamlessly with the rest of the footage. Modern software (Avid, Premiere and others) makes the task much easier, allowing editors to work with entire sections of the picture.

Algorithmic film editing without human intervention is on its way. Such a program follows the script, sorts and labels takes, and applies rules hard-coded from editing textbooks.
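The rule-based part of this idea can be sketched in a few lines of Python. This is a hypothetical illustration and does not describe any real editing product. The `Take` fields, the rating score and the selection rule standing in for "textbook knowledge" are all assumptions. The program sorts takes by scene, picks one take per scene in script order, and labels every take.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Take:
    scene: str             # scene number as written in the script
    number: int            # take number from the slate
    rating: int            # hypothetical quality score, e.g. 1-5
    flubbed: bool = False  # True if an actor fluffed a line in this take


def pick_take(group: List[Take]) -> Optional[Take]:
    """Hard-coded 'textbook' rule (an assumption): never use a flubbed take;
    otherwise prefer the highest rating, then the earliest take."""
    usable = [t for t in group if not t.flubbed]
    if not usable:
        return None
    return min(usable, key=lambda t: (-t.rating, t.number))


def rough_cut(script_order: List[str], takes: List[Take]) -> List[Tuple[str, Optional[int]]]:
    """Sort takes by scene and return one chosen take per scene, in script order.
    A scene with no usable take gets None, flagging it for a human editor."""
    by_scene: Dict[str, List[Take]] = defaultdict(list)
    for t in takes:
        by_scene[t.scene].append(t)
    cut = []
    for scene in script_order:
        best = pick_take(by_scene.get(scene, []))
        cut.append((scene, best.number if best else None))
    return cut


def label_takes(takes: List[Take],
                cut: List[Tuple[str, Optional[int]]]) -> Dict[Tuple[str, int], str]:
    """Label every take: SELECT (used in the cut), ALT (usable backup) or NG (no good)."""
    selected = {(scene, n) for scene, n in cut if n is not None}
    labels = {}
    for t in takes:
        key = (t.scene, t.number)
        if t.flubbed:
            labels[key] = "NG"
        elif key in selected:
            labels[key] = "SELECT"
        else:
            labels[key] = "ALT"
    return labels


takes = [
    Take("2", 1, 4),
    Take("1", 1, 3),
    Take("1", 2, 5, flubbed=True),
    Take("1", 3, 3),
    Take("2", 2, 5),
]
cut = rough_cut(["1", "2", "3"], takes)
print(cut)  # [('1', 1), ('2', 2), ('3', None)]
print(label_takes(takes, cut))
```

Scene 3 comes back as `None` instead of being silently dropped. This way a human editor can see where the rough cut still has gaps.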

Cloud storage serves as a virtual editing studio (again, a trend the pandemic has accelerated). When a project is stored in the cloud, there is no need to worry that someone will accidentally delete it from a computer or close a window without saving. Work on a film “moves” into digital space, so studios in different parts of the world can work on the same project simultaneously.

AI in the writer’s chair

If an actor can be replaced by a digital copy, so can a screenwriter. Artificial intelligence is not yet writing scripts for mainstream cinema, but the first experiments with AI-made short films are already appearing in the CIS and Europe.

In 2018, the tech publication Ars Technica released the short film Zone Out, whose script was written by a neural network. The artificial intelligence, named Benjamin, made the film in 48 hours from fragments of old movies and clips recorded by professional actors against a chroma key background. The result was not of the highest quality: mustaches keep appearing on women’s faces, and the characters sound like robots.

Another story about an AI filmmaker comes from the CIS. Ukrainian programmer Vladimir Alekseev created a two-minute short film, “Empty Room”, using artificial intelligence. Neural networks wrote the script and the music and also chose and voiced the actors. According to the author, though, the neural network’s mechanical voice sounded flat in some dialogues, and Replica Studios helped bring it to life. The soundtrack, reminiscent of Mozart, was generated by the Jukebox neural network. Alekseev himself only edited the individual shots together into a film.

Virtual reality

James Clyne, head of design at Lucasfilm, was responsible for designing the train robbery scene in Solo: A Star Wars Story. He helped design the Imperial train that was to be the centerpiece of this episode. The question was how the actors would perform the jumps on a virtual object.

For Clyne, the answer was Lucasfilm’s Virtual Scout technology, which designers commonly use to take virtual tours of their computer-generated creations. The application helps designers feel the volume of a design or see its details in motion, and filmmakers also use it to visualize shots in a virtual space. For the train scene, the actors wore VR headsets, which helped them play the action on the soundstage as if it were taking place on a real set.

Digital Domain, founded by James Cameron and visual effects master Stan Winston, uses virtual reality to give directors, actors and production teams advanced tools for visualizing the action, with multiple camera feeds and even a virtual camera operator.

Without all these technologies, Hollywood (and not only Hollywood) simply could not shoot so many new films. They simplify filming, editing, distribution and storage, and make all of them less expensive.
